Navigating the MCP Ecosystem: Security, Strategy, and Custom Implementation

One of the most frequent questions we encounter when discussing the Model Context Protocol (MCP) is straightforward: Does this tool actually support it? The current landscape of MCP adoption is a mixed bag, but one that is rapidly evolving. When looking at popular developer tools, there are promising signs of integration.

Cursor generally offers robust support, which is excellent news given its rising popularity among developers. Similarly, platforms like Postman and Tombow are integrating these capabilities. However, a significant number of tools still lack deep integration, specifically regarding elicitation features.

The Elicitation Gap in MCP

Elicitation is the ability for an interface to ask the user for missing information required by a tool. This is a critical feature of the MCP specification, designed to stop the "guesswork" of Large Language Models (LLMs) by allowing them to ask clarifying questions rather than making assumptions.

While VS Code and Cursor handle this well by creating seamless user interfaces for these interactions, support is variable elsewhere. If your target users live in VS Code or Cursor, you can confidently leverage elicitation to build interactive workflows. However, outside these environments, the user experience can be fragmented.
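To make the mechanics concrete, here is a minimal sketch of what an elicitation exchange can look like on the wire, written without any SDK. The method name `elicitation/create`, the `message` and `requestedSchema` parameters, and the `accept` action reflect our reading of the MCP specification's elicitation section; verify them against the spec version your client implements. The helper names `build_elicitation_request` and `resolve_elicitation` are hypothetical.

```python
def build_elicitation_request(request_id, message, properties, required):
    """Build a JSON-RPC request asking the client to collect missing input."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        # Method and parameter names are assumed from the MCP elicitation spec.
        "method": "elicitation/create",
        "params": {
            "message": message,
            "requestedSchema": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }


def resolve_elicitation(result, fallback=None):
    """Return the user's answers if they accepted, otherwise the fallback."""
    if result.get("action") == "accept":
        return result.get("content", {})
    # Declined, cancelled, or unrecognized: do not guess on the user's behalf.
    return fallback


request = build_elicitation_request(
    1, "Which repository should I use?", {"repo": {"type": "string"}}, ["repo"]
)
answers = resolve_elicitation({"action": "accept", "content": {"repo": "docs"}})
```

Because support varies between clients, a server would typically fall back to a plain error or a default value when the user declines or the client never advertises elicitation support.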

Copilot Studio and Privacy Concerns

A common friction point arises with Copilot Studio and Microsoft Copilot. Developers often ask how to call a third-party MCP server while keeping the query private within their Microsoft tenant. The reality is complex. To use a third-party MCP server, you generally must expose the question to that server. There is currently no simple method to "mask" the prompt while still retrieving the necessary tool output.

For privacy-conscious enterprises, this is a critical consideration. While usage inside Edge or isolated environments offers some protection, connecting Copilot to external MCP servers requires a careful audit of data flow.

Security in the Age of Autonomous Agents

As we move toward autonomous multi-agent solutions, the conversation inevitably turns to risk. There is a running joke in the industry that the "S" in MCP stands for security. There is, of course, no "S" in MCP, and that is the point: security is often an afterthought. However, for enterprise adoption, security must be the priority.

We recommend looking at the concept of the "Lethal Trifecta" regarding AI security. Significant danger arises when you combine three specific factors:

- Access to private data: The agent can read sensitive information.

- Write operations: The agent has the ability to change, delete, or modify systems.

- Untrusted user input: The agent accepts prompts from external or unverified sources.

If your agent possesses all three of these characteristics, you are in the danger zone.
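The three factors above can be turned into a simple review check. The sketch below is illustrative only: the `AgentProfile` class and its field names are hypothetical, and in practice you would derive these flags from the tools and tokens an agent is actually granted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentProfile:
    """Hypothetical summary of what an agent is allowed to do."""
    name: str
    reads_private_data: bool
    can_write: bool
    accepts_untrusted_input: bool


def trifecta_factors(agent):
    """List which of the three risk factors this agent has."""
    factors = []
    if agent.reads_private_data:
        factors.append("access to private data")
    if agent.can_write:
        factors.append("write operations")
    if agent.accepts_untrusted_input:
        factors.append("untrusted user input")
    return factors


def in_danger_zone(agent):
    """An agent is in the danger zone only when all three factors combine."""
    return len(trifecta_factors(agent)) == 3


triage_bot = AgentProfile("triage-bot", True, True, True)
reader_bot = AgentProfile("reader-bot", True, False, True)
```

Here `triage_bot` is flagged while `reader_bot` is not, which suggests the cheapest fix is often to remove one factor rather than to harden all three.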

Practical Mitigation Strategies

To safely deploy MCP-enabled agents, consider these mitigation strategies:

- Read vs. Write Separation: Be extremely cautious about granting write permissions. Reading a private repository is comparatively low-risk on its own; allowing an agent to comment on a public thread with data from that private repository creates a data leak risk.

- Granular Permissions: When creating an access token (such as a GitHub PAT) for an agent, scope it strictly. Limit the token to specific repositories and specific actions, such as "Issues: Read Only."

- Strict Isolation: Avoid running agents directly on production machines or main development environments. Use Dev Containers, GitHub Codespaces, or isolated VMs. For high-security tasks, we utilize Azure Container Apps dynamic sessions to create completely sandboxed environments that are destroyed immediately after use.

- The "No-Go" List: Configure settings to require human approval for specific commands. If an agent has CLI access, ensure destructive commands (like deleting resource groups) are flagged for mandatory user review.
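As one way to picture the "No-Go" list, the sketch below gates CLI commands behind a human approval callback. Everything here is an assumption for illustration: the prefix list, the `requires_approval` and `run_guarded` helpers, and the callback shapes are hypothetical and not a feature of any particular agent host.

```python
import shlex

# Hypothetical deny-list: command prefixes that always need a human decision.
NO_GO_PREFIXES = [
    ("az", "group", "delete"),
    ("rm", "-rf"),
    ("git", "push", "--force"),
]


def requires_approval(command):
    """Return True when the command starts with any no-go prefix."""
    tokens = tuple(shlex.split(command))
    return any(tokens[: len(prefix)] == prefix for prefix in NO_GO_PREFIXES)


def run_guarded(command, approve, execute):
    """Run a command, asking `approve` first if it is on the no-go list."""
    if requires_approval(command) and not approve(command):
        return "blocked"
    execute(command)
    return "executed"


executed = []
status = run_guarded(
    "az group delete --name prod-rg --yes",
    approve=lambda cmd: False,  # the reviewer says no
    execute=executed.append,    # stand-in for a real command runner
)
```

Prefix matching is easy to bypass (for example by wrapping a command in a shell script), so treat a list like this as one layer on top of scoped tokens and isolation rather than a replacement for them.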

Building vs. Buying: When to Write Your Own MCP Server

Developers often look to the VS Code marketplace or public registries to find existing MCP servers for tools like GitHub, Postgres, or Datadog. These are excellent resources for prototyping and handling one-off workflows. However, when you need enterprise control, relying on public wrappers may not be sufficient.

Most available MCP servers are simply wrappers around a public API or SDK. If the public GitHub MCP server exposes too much data or formats it in a way that does not fit your internal process, it is often more effective to build your own.

With modern LLMs, writing a custom server is less daunting than it appears. Since APIs for major platforms are well-documented, LLMs can generate the majority of the boilerplate code. The rule of thumb is simple: use public MCP servers for exploration, but build your own MCP server on top of the API as soon as you have a repeated, critical business workflow. This ensures you maintain control over the tools, the prompts, and the security boundaries.
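Much of the value of a custom server is in deciding what the model gets to see. The sketch below shows only that filtering step, assuming the raw input is an issue object shaped like the GitHub REST API response. The allowlist and the `slim_issue` helper are hypothetical, and the tool registration itself is omitted because it depends on the MCP SDK you choose.

```python
# Hypothetical allowlist: the only issue fields the tool returns to the model.
ALLOWED_ISSUE_FIELDS = ("number", "title", "state", "html_url")


def slim_issue(raw_issue):
    """Reduce a raw issue payload to the fields our workflow needs."""
    slim = {key: raw_issue[key] for key in ALLOWED_ISSUE_FIELDS if key in raw_issue}
    # Flatten label objects to plain names; drop everything else.
    slim["labels"] = [label["name"] for label in raw_issue.get("labels", [])]
    return slim


raw = {
    "number": 42,
    "title": "Login fails on retry",
    "state": "open",
    "html_url": "https://example.invalid/issues/42",
    "body": "Internal details that should not reach the model",
    "user": {"login": "someone"},
    "labels": [{"name": "bug", "color": "d73a4a"}],
}
result = slim_issue(raw)
```

An allowlist fails closed: new fields added upstream stay hidden until someone deliberately exposes them, which is usually the safer default for an internal tool.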

The Future: Enterprise Registries and Observability

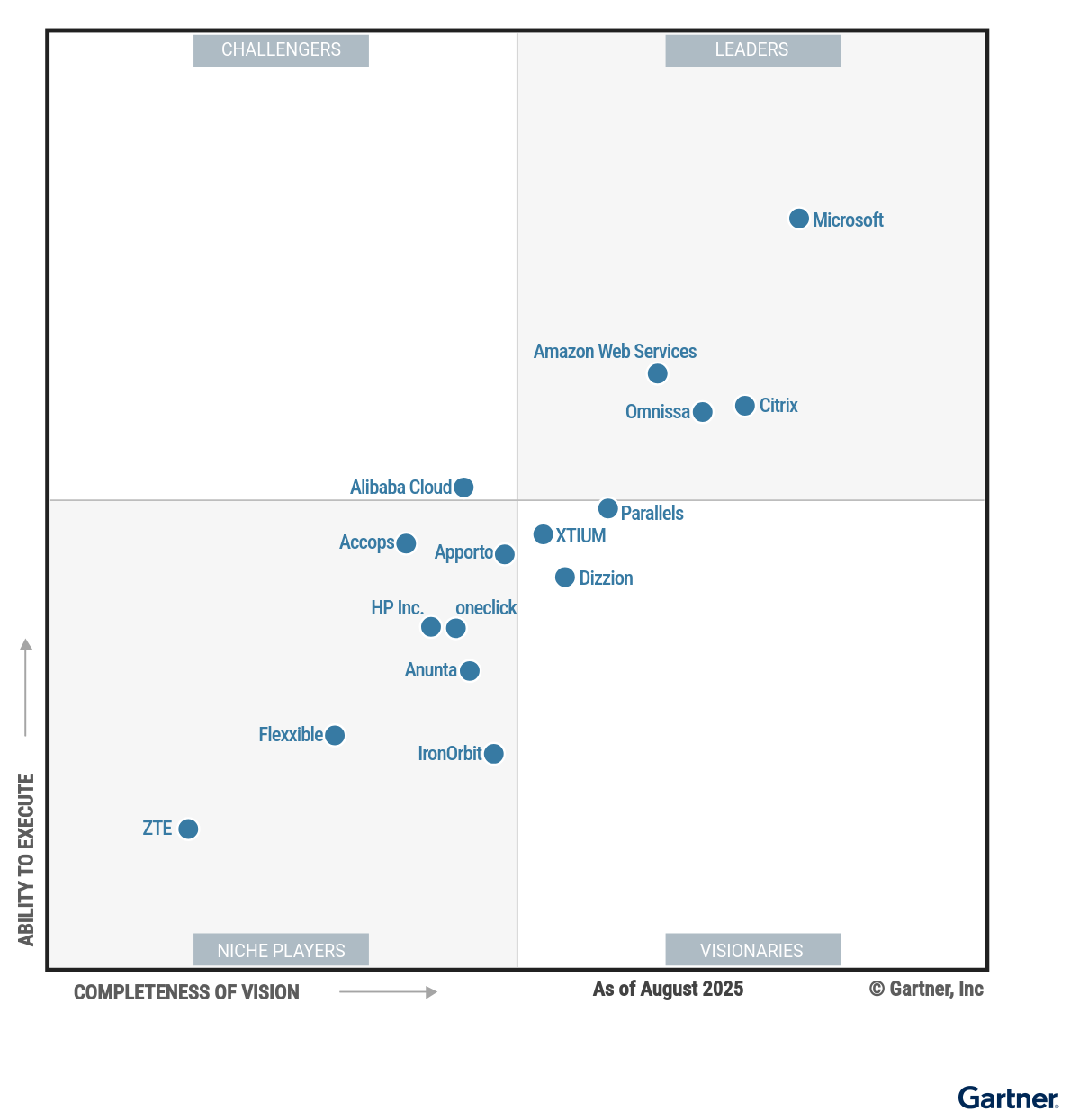

For large organizations, managing a fleet of MCP servers is the next challenge. We are seeing industry leaders build internal MCP registries and auditing software to manage this at scale. Microsoft is also streamlining this with Azure API Center and AI Gateway, which now support maintaining an inventory of remote MCP servers.

If you are building MCP servers for your team, observability is non-negotiable. By tracking User-Agent strings in your server logs, you can identify which clients are calling your tools and how often. Whether you are piping logs into Datadog or leveraging Azure Monitor, understanding usage patterns is key to maintaining a healthy ecosystem.
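As a rough illustration of User-Agent tracking, the sketch below tallies requests per client from structured log records. The record shape (a dict with a `user_agent` key) and the sample values are hypothetical; adapt the parsing to whatever your server or log platform actually emits.

```python
from collections import Counter


def count_clients(log_records):
    """Count requests per client product, taken from the User-Agent prefix."""
    counts = Counter()
    for record in log_records:
        user_agent = record.get("user_agent") or "unknown"
        # Keep only the product token before the first "/" (e.g. "name/1.2").
        product = user_agent.split("/", 1)[0].strip() or "unknown"
        counts[product] += 1
    return counts


sample_logs = [
    {"tool": "list_issues", "user_agent": "example-ide/1.2 (linux)"},
    {"tool": "list_issues", "user_agent": "example-ide/1.3 (macos)"},
    {"tool": "get_issue", "user_agent": "example-cli/0.9"},
    {"tool": "get_issue"},  # no header recorded
]
usage = count_clients(sample_logs)
```

The same grouping can be done as a query in your log platform; the point is simply to record the header on every request so the question can be answered later.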

At FlowDevs, we specialize in implementing securely architected AI solutions and custom integration strategies. If you are looking to build a robust MCP strategy or need help securing your intelligent automation workflows, we are here to help.

Ready to streamline your complex workflows? Book a consultation with us today at bookings.flowdevs.io.

